Openhab face detection with Frigate and CompreFace

Please read Liability Disclaimer and License Agreement CAREFULLY

This article aims to help you implement face detection in Openhab, assuming that you have already installed and configured Frigate and CompreFace.

In my case Frigate and CompreFace run in containers under docker-compose. The docker-compose configuration is published in the article My docker compose yaml file, and the Frigate configuration is published in the article My Frigate yaml file.

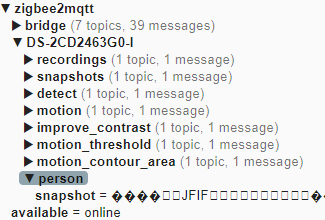

From Frigate you should have the default MQTT topics; the one we are interested in is "person/snapshot".

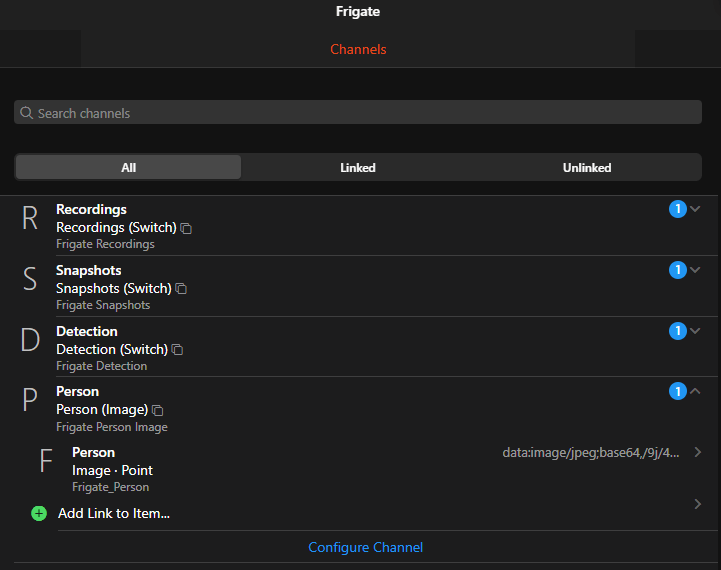

In Openhab I have created a Thing called Frigate; its code is shown below.

    UID: mqtt:topic:MyMQTT:Frigate
    label: Frigate
    thingTypeUID: mqtt:topic
    configuration: {}
    bridgeUID: mqtt:broker:MyMQTT
    channels:
      - id: Recordings
        channelTypeUID: mqtt:switch
        label: Recordings
        description: Frigate Recordings
        configuration:
          commandTopic: zigbee2mqtt/DS-2CD2463G0-I/recordings/state
          stateTopic: zigbee2mqtt/DS-2CD2463G0-I/recordings/state
          off: OFF
          on: ON
      - id: Snapshots
        channelTypeUID: mqtt:switch
        label: Snapshots
        description: Frigate Snapshots
        configuration:
          commandTopic: zigbee2mqtt/DS-2CD2463G0-I/snapshots/state
          stateTopic: zigbee2mqtt/DS-2CD2463G0-I/snapshots/state
          off: OFF
          on: ON
      - id: Detection
        channelTypeUID: mqtt:switch
        label: Detection
        description: Frigate Detection
        configuration:
          commandTopic: zigbee2mqtt/DS-2CD2463G0-I/detect/state
          stateTopic: zigbee2mqtt/DS-2CD2463G0-I/detect/state
          off: OFF
          on: ON
      - id: Person
        channelTypeUID: mqtt:image
        label: Person
        description: Frigate Person Image
        configuration:
          stateTopic: zigbee2mqtt/DS-2CD2463G0-I/person/snapshot

When Frigate detects a person, it takes a snapshot and publishes it on zigbee2mqtt/DS-2CD2463G0-I/person/snapshot.

We have to send the snapshot from Frigate to CompreFace. This snapshot can be opened in a browser at http://FrigateIP:FrigatePort/api/FrigateCameraName/latest.jpg

I'm assuming that you have installed, configured and trained CompreFace.

Go to /etc/openhab/scripts (this is where my Openhab is installed, adjust the path based on your installation) and create a new script file; let's name it sendToCompreface.sh. Paste the content below and replace the paths and names based on your needs. After the file is saved, make sure that the file permissions include Execute for the user openhab.

You can change the "...?face_plugins=" part so that the reply from CompreFace includes more details, for example "?face_plugins=landmarks,gender,age,pose". Consult the CompreFace API documentation for details; I am interested only in the gender and age plugins.

    #!/bin/bash

    # Set the variables
    API_URL="http://CompreFace_IP:CompreFace_Port/api/v1/recognition/recognize?face_plugins=gender,age"
    API_KEY="YourApiKey"
    IMAGE_URL="http://Frigate_IP:Frigate_Port/api/YourCameraName/latest.jpg"

    # Download the latest snapshot from Frigate into the script folder
    curl "${IMAGE_URL}" --output /etc/openhab/scripts/latest.jpg --silent

    # Send the downloaded snapshot to CompreFace
    curl -X POST "$API_URL" \
      -H "Content-Type: multipart/form-data" \
      -H "x-api-key: $API_KEY" \
      -F "file=@/etc/openhab/scripts/latest.jpg"

You can test the script by going into the folder

    cd /etc/openhab/scripts/

and then typing the command

    bash sendToCompreface.sh

You should see the script output on the screen.

I had to copy the latest.jpg file into the script folder, as I had issues getting it directly from http://FrigateIP:FrigatePort/api/FrigateCameraName/latest.jpg.

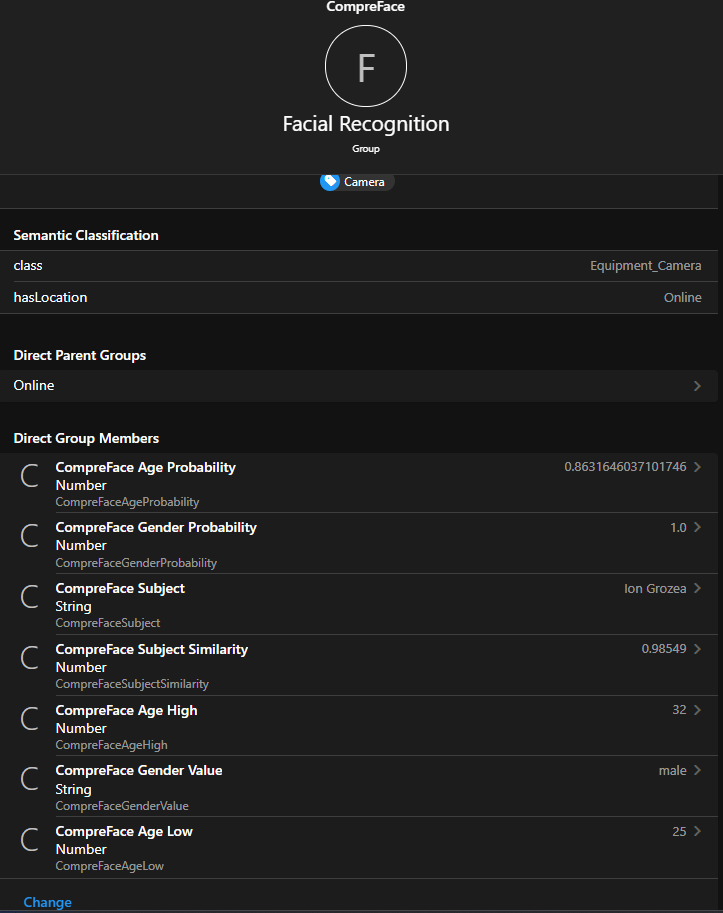

In Openhab I created a Model called Facial Recognition and added to it the items shown in the picture below.

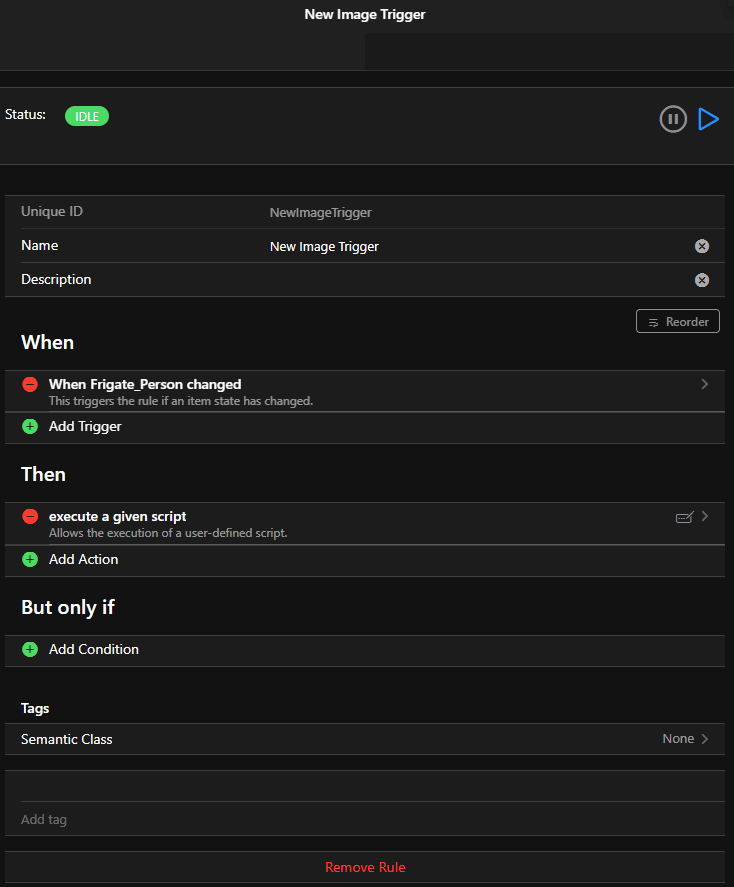

After this step is completed, go to Settings, Rules and create a new rule. I named it New Image Trigger; see the screenshot below.

The Rule code is posted below.

    //var logger = Java.type('org.slf4j.LoggerFactory').getLogger('org.openhab.rule.' + ctx.ruleUID);
    var HTTP = Java.type("org.openhab.core.model.script.actions.HTTP");
    var Exec = Java.type("org.openhab.core.model.script.actions.Exec");

    // Run the script and capture its output (10 second timeout)
    var script = Exec.executeCommandLine(java.time.Duration.ofSeconds(10),"/etc/openhab/scripts/sendToCompreface.sh");
    //logger.info("HTTP POST Response = " + script);

    // Make sure the file size is reported in kB: the output is split on "k"
    // and the JSON reply is expected in the third piece
    var json = script.toString().split("k");
    json = JSON.parse(json[2]);

    // Update the Facial Recognition items from the first detected face
    items.getItem("CompreFaceAgeProbability").postUpdate(json.result[0].age.probability);
    items.getItem("CompreFaceAgeLow").postUpdate(json.result[0].age.low);
    items.getItem("CompreFaceAgeHigh").postUpdate(json.result[0].age.high);
    items.getItem("CompreFaceGenderValue").postUpdate(json.result[0].gender.value);
    items.getItem("CompreFaceGenderProbability").postUpdate(json.result[0].gender.probability);
    items.getItem("CompreFaceSubject").postUpdate(json.result[0].subjects[0].subject);
    items.getItem("CompreFaceSubjectSimilarity").postUpdate(json.result[0].subjects[0].similarity);
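The rule code reads json.result[0] and subjects[0] directly, so it assumes that CompreFace found at least one face and matched at least one subject. If the reply has no result entry, the rule throws and no item is updated. Below is a minimal sketch of a more defensive way to read the reply. The helper name readFirstFace and the sample values are made up for illustration; only the field names are taken from the rule.

```javascript
// Hypothetical helper: reads the first face from a parsed CompreFace reply.
// Returns null when the reply contains no face, instead of throwing.
function readFirstFace(json) {
  if (!json || !Array.isArray(json.result) || json.result.length === 0) {
    return null;
  }
  var face = json.result[0];
  var age = face.age || {};       // may be missing if the age plugin is off
  var gender = face.gender || {}; // may be missing if the gender plugin is off
  var best = (face.subjects && face.subjects[0]) || {}; // first subject, if any
  return {
    ageProbability: age.probability,
    ageLow: age.low,
    ageHigh: age.high,
    genderValue: gender.value,
    genderProbability: gender.probability,
    subject: best.subject,
    similarity: best.similarity
  };
}

// Invented sample reply, shaped like the fields the rule reads
var sample = {
  result: [{
    age: { probability: 0.9, low: 25, high: 32 },
    gender: { probability: 0.99, value: "male" },
    subjects: [{ subject: "John", similarity: 0.97 }]
  }]
};

console.log(readFirstFace(sample).subject); // John
console.log(readFirstFace({ result: [] })); // null
```

In the rule, each postUpdate call would then run only when the returned object is not null and the corresponding value is defined.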

To sum up, every time Frigate posts a new image with a person detected, the rule New Image Trigger is triggered and the script sendToCompreface.sh is executed. The script copies latest.jpg into /etc/openhab/scripts and then sends it to CompreFace for recognition. The rule receives the result, processes it and updates the Facial Recognition Items. From this point on you can use them as you see fit.
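As one example of using the items, a follow-up rule might treat a face as recognized only when the similarity is high enough. The sketch below shows the idea in plain JavaScript. The function name isRecognized and the threshold of 0.9 are assumptions chosen for illustration, not values from my setup, and would need tuning for your cameras.

```javascript
// Hypothetical helper: decides whether a CompreFace match should be trusted.
// subject and similarity correspond to the CompreFaceSubject and
// CompreFaceSubjectSimilarity items; threshold is an assumed cut-off.
function isRecognized(subject, similarity, threshold) {
  if (!subject || typeof similarity !== "number" || isNaN(similarity)) {
    return false; // no subject or no usable similarity value
  }
  return similarity >= threshold;
}

console.log(isRecognized("John", 0.97, 0.9)); // true
console.log(isRecognized("John", 0.55, 0.9)); // false
```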

Running the script from Openhab ignores the --silent option, so var script also contains the result of the copy operation. The JSON text starts right after the file size, so I have used the k as a marker to split the string.
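Splitting on k works as long as the letter appears exactly twice before the JSON and never inside it; a subject name containing a k, for example, would cut the JSON short. A sketch of an alternative that looks for the outermost curly braces instead is shown below. The function name extractJson is hypothetical, the sample output is invented, and the approach assumes that the text printed before the JSON contains no curly braces.

```javascript
// Hypothetical helper: pulls the JSON object out of the raw script output
// by taking everything from the first "{" to the last "}".
function extractJson(output) {
  var text = String(output);
  var start = text.indexOf("{");
  var end = text.lastIndexOf("}");
  if (start === -1 || end < start) {
    return null; // no JSON object in the output
  }
  try {
    return JSON.parse(text.substring(start, end + 1));
  } catch (e) {
    return null; // the text between the braces was not valid JSON
  }
}

// Invented example of script output: transfer text followed by the reply
var output = "100 45k 100 312 100 45k 0 0 --:--:-- " +
             '{"result":[{"subjects":[{"subject":"Frank","similarity":0.98}]}]}';

console.log(extractJson(output).result[0].subjects[0].subject); // Frank
```

In the rule, this would replace the two lines that split the string and parse json[2], with a check for null before the items are updated.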
